synthetic expeditions

fragmented perceptions of Tokyo in transit

EM-EX exhibition

Keio University, KMD-EM

MediaArchitecture M.Sc.

December 2024

Carlos García Fernández, Ismael Rasa

This project explores how minimal inputs can synthesise experiential episodes within the context of built environments. In this iteration, sound and light render the experience of riding the JR Yamanote Line around Tokyo.

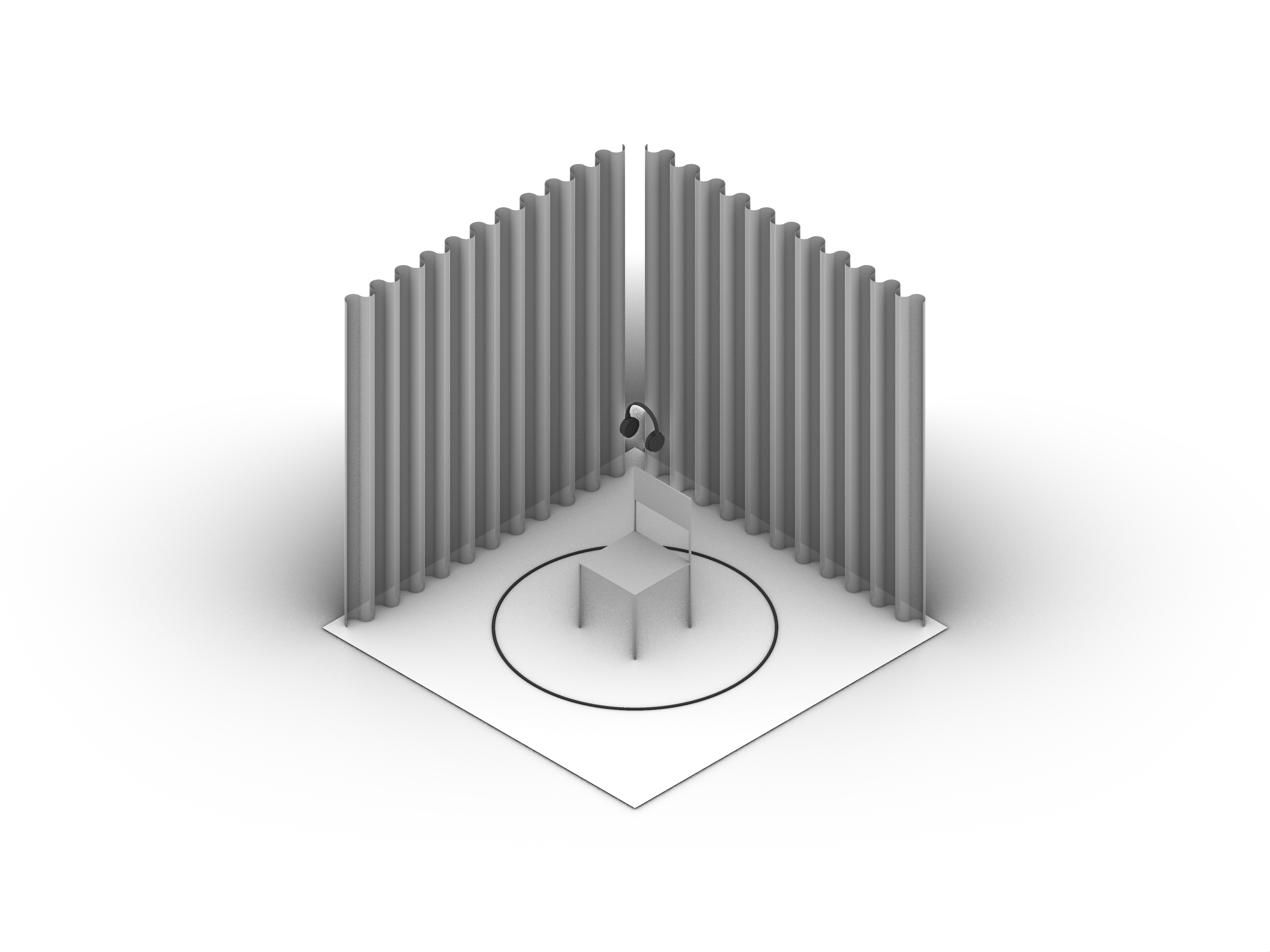

This piece features only two elements: sound and light. Visitors are seated in a dark space and handed a pair of headphones equipped with a head tracker. When the experience begins, a rotating pattern on a ring of lights follows the visitor's head movements, which are in turn synchronised with a collage of interweaving binaural recordings. With a few simple movements of the head, the visitor controls both the temporal qualities of the recordings and the moving shadows cast on the surrounding environment.

Project by Carlos García Fernández and Ismael Rasa

Keio Media Design, Embodied Media - Keio University

The installation combines sensors, lighting, and audio hardware to create an immersive, interactive experience. A head-tracking sensor (Bluetooth Nx Head Tracker) detects the visitor’s movements, which in turn modulate both the lighting and the soundscape in real time.

A circular array of LED lights, controlled by an Arduino microcontroller, generates shifting patterns of illumination and shadow, reinforcing the perception of movement and space. High-quality headphones deliver the spatialized binaural sound, immersing participants in a layered auditory experience of the JR Yamanote Line. Synchronising these hardware components enables seamless interaction between the visitor’s motion, the dynamic lighting, and the evolving soundscape.

The installation relies on Arduino, Pure Data, Max MSP, and NxOSC to create a dynamic interplay between light and sound, responding to user movement in real time. Head-tracking data is transmitted via NxOSC to Pure Data, which then distributes this data to both Arduino and Max MSP, ensuring synchronized audiovisual interaction.
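The routing step described above can be illustrated outside of Pure Data. The following is a minimal Python sketch, not the installation's actual patch: it encodes and decodes an OSC 1.0 message carrying float arguments and hands the decoded values to two handlers that stand in for the lighting and audio paths. The address `/head/ypr` and the yaw/pitch/roll argument layout are assumptions made for illustration; the real message format sent by NxOSC is not specified here.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)  # big-endian floats
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def decode_osc(packet: bytes):
    """Return (address, values) for a float-only OSC message."""

    def read_string(offset):
        end = packet.index(b"\x00", offset)
        text = packet[offset:end].decode("ascii")
        # Skip the terminator and padding up to the next 4-byte boundary.
        return text, (end + 4) & ~3

    address, offset = read_string(0)
    type_tags, offset = read_string(offset)
    count = type_tags.count("f")
    values = struct.unpack(f">{count}f", packet[offset:offset + 4 * count])
    return address, values


def route(packet: bytes, handlers) -> None:
    """Decode once, then pass the same values to every handler."""
    address, values = decode_osc(packet)
    for handler in handlers:
        handler(address, values)


# Hypothetical usage: one handler per downstream path.
to_lights, to_audio = [], []
packet = encode_osc("/head/ypr", 90.0, 0.0, -15.0)  # assumed address and layout
route(packet, [lambda a, v: to_lights.append(v), lambda a, v: to_audio.append(v)])
```

Decoding once and distributing the same values to both consumers is what keeps the two paths aligned: light and sound react to an identical head pose rather than to two separately sampled readings.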

Pure Data uses the comport external (a Pure Data external for serial communication) to send data to Arduino, which controls a circular array of lights. The system dynamically adjusts brightness and rotation speed based on visitor movement, generating shifting shadows that reinforce the sensation of motion and spatial immersion.
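As a rough illustration of how movement could drive brightness and rotation, the sketch below computes one frame of per-LED levels for a ring and advances the pattern's phase from a head rotation rate. It is written in Python as a stand-in for logic that, in the installation, lives in the Pure Data patch and the Arduino firmware. The LED count, the raised-cosine spot shape, the gain, and the one-byte-per-LED serial framing are all assumptions, not details taken from the project.

```python
import math

NUM_LEDS = 24  # hypothetical ring size


def ring_frame(phase_deg, brightness, num_leds=NUM_LEDS, width_deg=60.0):
    """Return one 0-255 level per LED: a soft bright spot centred on phase_deg."""
    brightness = max(0.0, min(1.0, brightness))
    levels = []
    for i in range(num_leds):
        led_angle = 360.0 * i / num_leds
        # Shortest angular distance between this LED and the spot centre (0..180).
        diff = abs((led_angle - phase_deg + 180.0) % 360.0 - 180.0)
        if diff < width_deg:
            # Raised-cosine falloff: 1 at the centre, 0 at the edge of the spot.
            weight = 0.5 * (1.0 + math.cos(math.pi * diff / width_deg))
        else:
            weight = 0.0
        levels.append(round(255 * brightness * weight))
    return levels


def advance_phase(phase_deg, yaw_rate_deg_s, dt, gain=1.0):
    """Rotate the pattern in proportion to head rotation speed (gain is assumed)."""
    return (phase_deg + gain * yaw_rate_deg_s * dt) % 360.0


def to_serial_bytes(levels):
    """Pack levels as raw bytes, one per LED (an assumed framing for the serial link)."""
    return bytes(levels)


# Hypothetical usage: head turning at 90 deg/s, frames computed every 0.5 s.
phase = advance_phase(350.0, 90.0, 0.5)
frame = ring_frame(phase, brightness=1.0)
payload = to_serial_bytes(frame)
```

Because the spot is addressed by angle rather than by LED index, the same mapping works for rings of different sizes; only `num_leds` changes.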

Simultaneously, Max MSP processes and spatializes the binaural audio, manipulating playback speed and directionality in response to head movements. This allows the layered sound collage—composed of field recordings from the JR Yamanote Line—to evolve in real time, shaping a responsive and immersive listening experience.
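The playback-speed manipulation can be sketched in a few lines. This Python example is only an approximation of the idea, since the actual processing happens in Max MSP: it maps a head rotation rate to a clamped playback rate and then reads a sample buffer at that rate using linear interpolation. The mapping constants (`base`, `gain`, and the rate limits) are hypothetical, and spatialization is left out entirely.

```python
def playback_rate(yaw_rate_deg_s, base=1.0, gain=0.01, lo=0.25, hi=4.0):
    """Map head rotation speed to a playback rate, clamped to [lo, hi]."""
    rate = base + gain * yaw_rate_deg_s
    return max(lo, min(hi, rate))


def resample(samples, rate):
    """Read a buffer at a fractional step using linear interpolation.

    rate > 1 plays faster (fewer output samples), rate < 1 plays slower.
    """
    out = []
    pos = 0.0
    last = len(samples) - 1
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, last)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += rate
    return out


# Hypothetical usage: a still head plays the recording at its original speed.
recording = [0.0, 1.0, 2.0, 3.0, 4.0]
unchanged = resample(recording, playback_rate(0.0))
```

Clamping the rate keeps it strictly positive, so the read position always advances and the loop terminates. Reverse playback, if wanted, would need a separate read direction rather than a negative rate.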
